Hi, this is Run Peng (彭润). You can call me Run or Roihn. I am a second-year Ph.D. candidate in Computer Science and Engineering, advised by Professor Joyce Chai. My research focuses on bridging the gap between humans and AI agents.

Agents need to perceive, reason, and interact with both the world and humans. I'm thrilled to explore agent behaviors, human behaviors, and human-AI interaction when humans are co-situated with agents. From a cognitive perspective, I believe that identifying the commonalities and distinctions between human and agent behaviors can unlock new insights into the nature of understanding itself, ultimately guiding us toward more general AI agents.

🔥 News

- 2025.09: 😊 I will be the student poster chair for this year's Michigan AI Symposium. Please consider registering and bringing your poster!

- 2025.07: 🎉 Two papers were accepted at COLM 2025! The papers have been released!

- 2025.06: 🎉 I successfully passed the qualification exam and became a Ph.D. candidate!

- 2024.12: ❓ My photo somehow appeared in the UMich CSE 2024 Annual Report. (?)

- 2024.10: 🎉 One paper was accepted at EMNLP 2024 Findings! See you in Miami!

- 2024.09: 🎉 I will continue working with Professor Joyce Chai at the SLED Lab as a Ph.D. student!

📝 Publications

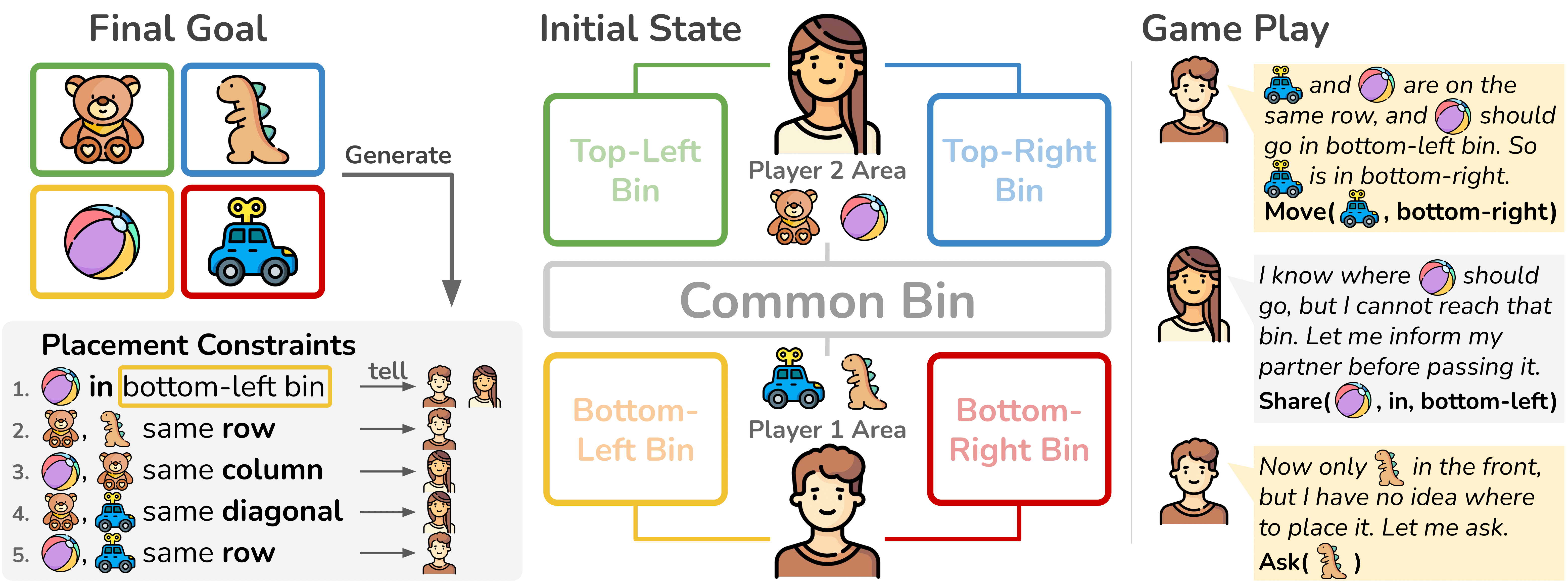

Communication and Verification in LLM Agents towards Collaboration under Information Asymmetry

Run Peng*, Ziqiao Ma*, Amy Pang, Sikai Li, Zhang Xi-Jia, Yingzhuo Yu, Cristian-Paul Bara, Joyce Chai

[Paper] [Code] [Dataset] [Checkpoint]

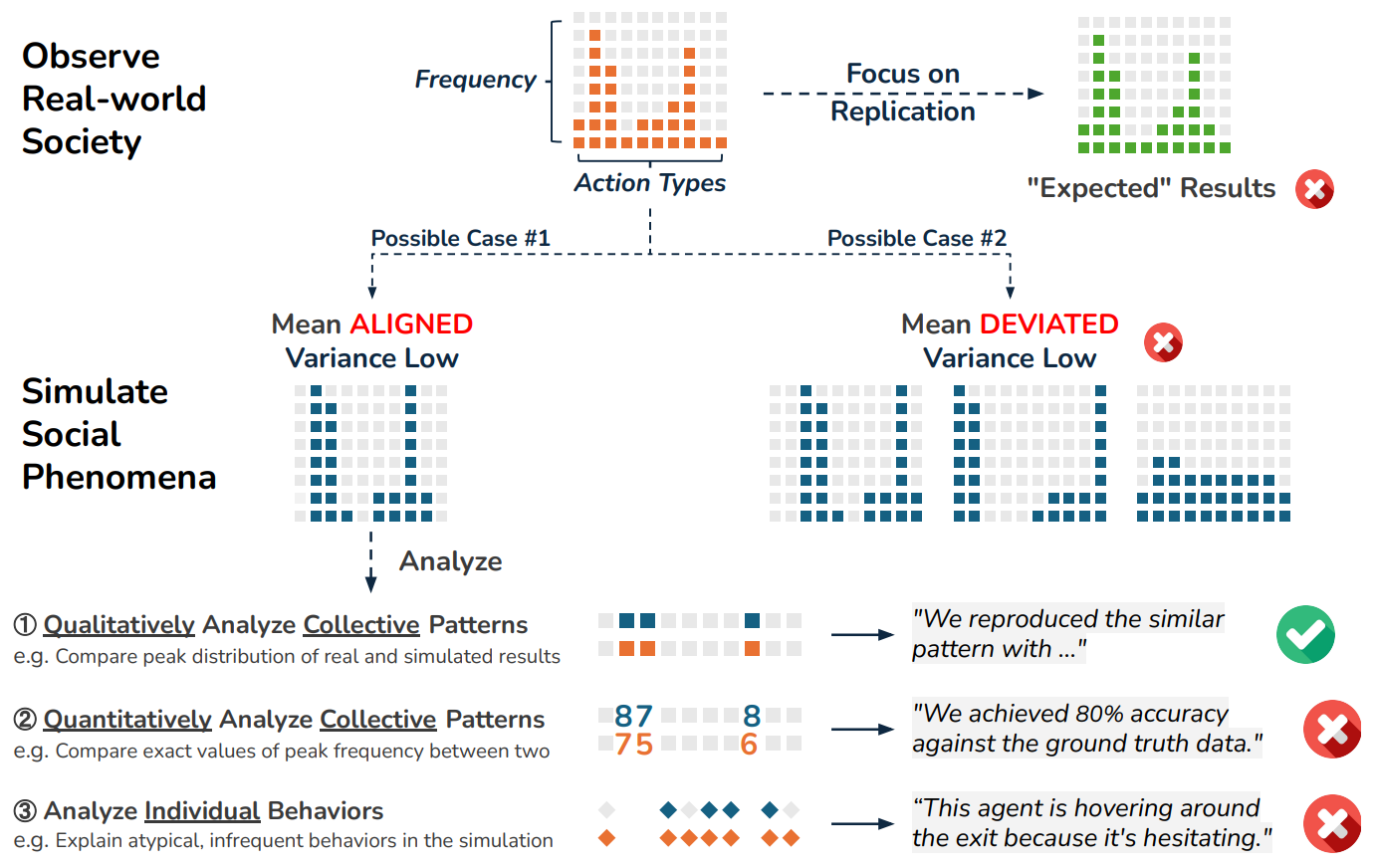

LLM-Based Social Simulations Require a Boundary

Zengqing Wu, Run Peng, Takayuki Ito, Chuan Xiao

[Paper]

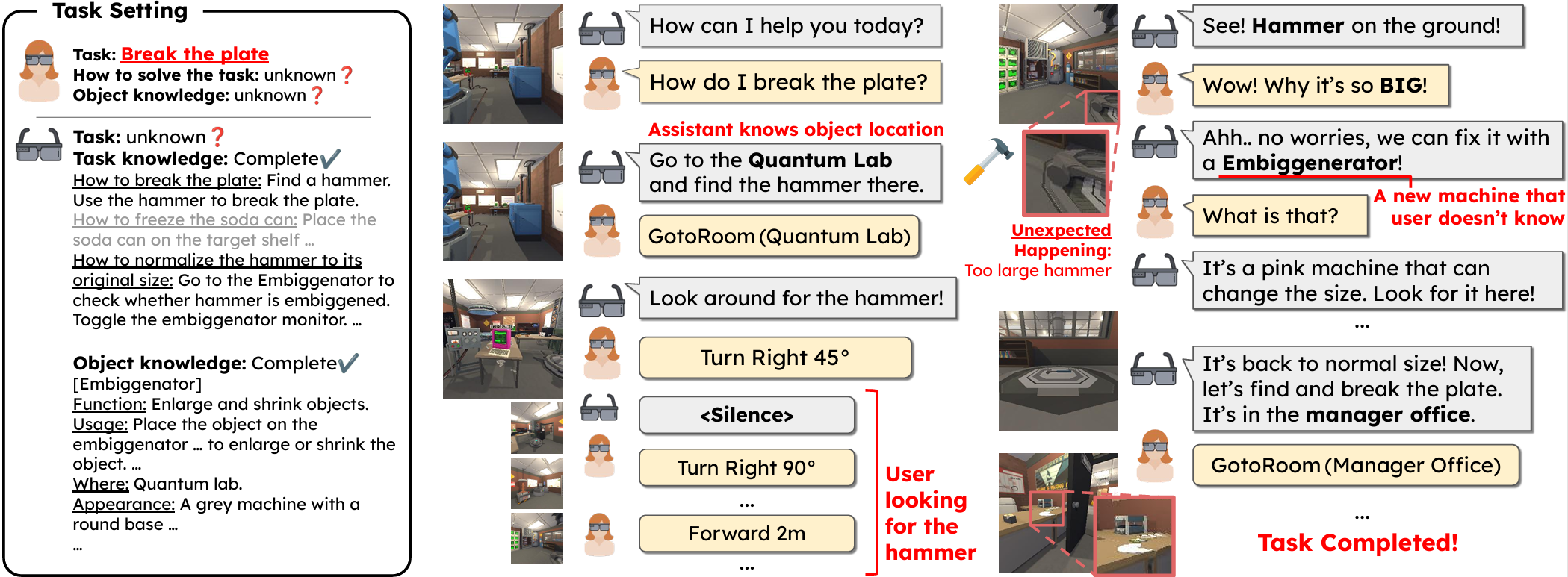

Bootstrapping Visual Assistant Development with Situated Interaction Simulation

Yichi Zhang, Run Peng, Lingyun Wu, Yinpei Dai, Xuweiyi Chen, Qiaozi Gao, Joyce Chai

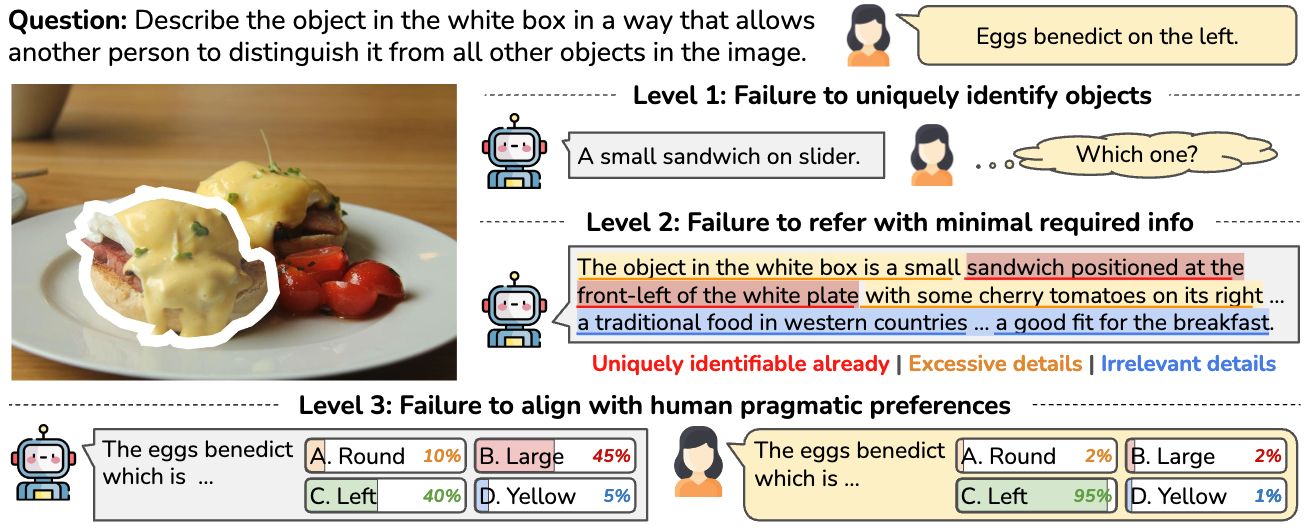

Vision-Language Models Are Not Pragmatically Competent in Referring Expression Generation

Ziqiao Ma, Jing Ding, Xuejun Zhang, Dezhi Luo, Jiahe Ding, Sihan Xu, Yuchen Huang, Run Peng, Joyce Chai

[Project] [Paper] [Code] [RefOI Dataset] [RefOI-TLHF Dataset]

Towards A Holistic Landscape of Situated Theory of Mind in Large Language Models

Ziqiao Ma, Jacob Sansom, Run Peng, Joyce Chai

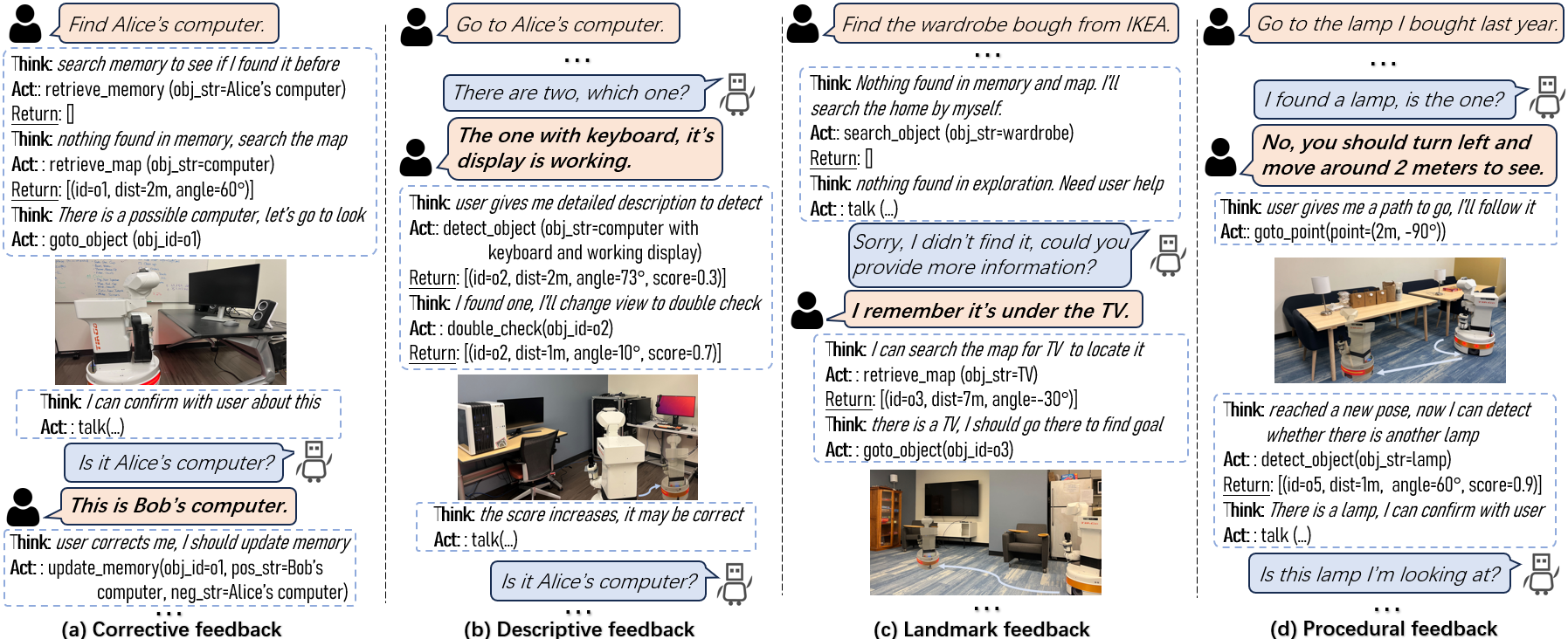

Think, Act, and Ask: Open-World Interactive Personalized Robot Navigation

Yinpei Dai, Run Peng, Sikai Li, Joyce Chai

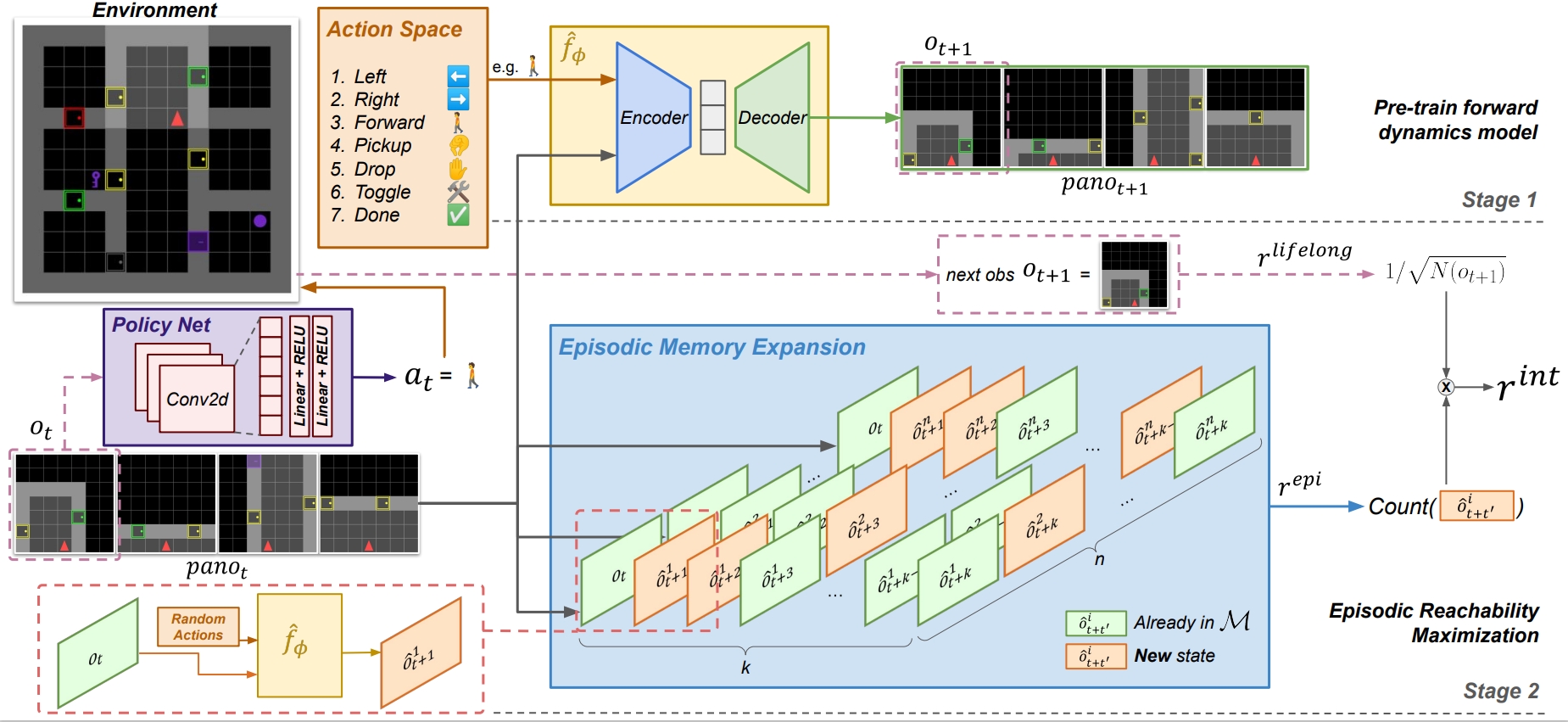

Go Beyond Imagination: Maximizing Episodic Reachability with World Models

Yao Fu, Run Peng, Honglak Lee

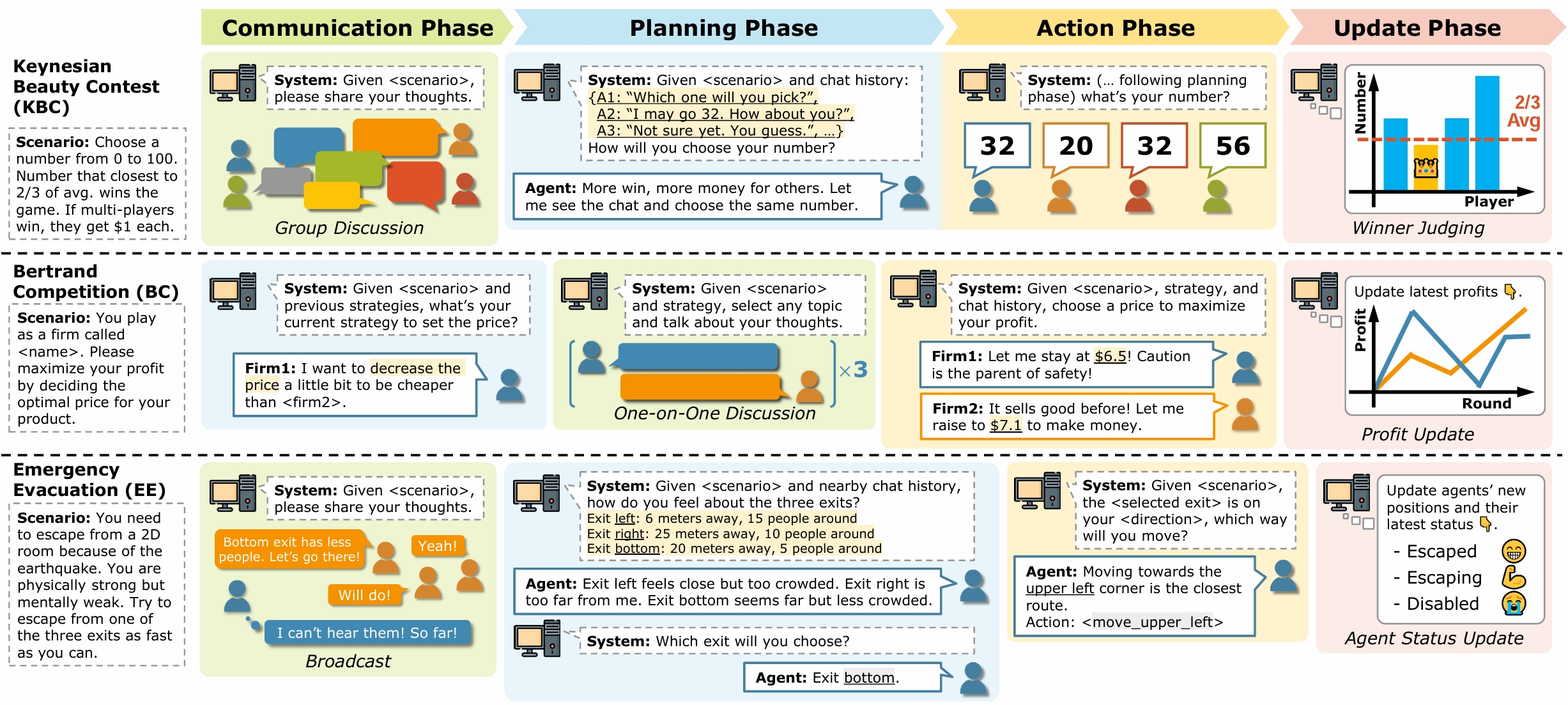

Shall We Team Up: Exploring Spontaneous Cooperation of Competing LLM Agents

Zengqing Wu*, Run Peng*, Shuyuan Zheng, Qianying Liu, Xu Han, Brian I. Kwon, Makoto Onizuka, Shaojie Tang, Chuan Xiao

Smart Agent-Based Modeling: On the Use of Large Language Models in Computer Simulations

Zengqing Wu, Run Peng, Xu Han, Shuyuan Zheng, Yixin Zhang, Chuan Xiao

Learning Exploration Policies with View-based Intrinsic Rewards

Yijie Guo*, Yao Fu*, Run Peng, Honglak Lee

NeurIPS 2022 DRL Workshop

📖 Education

- Now, Ph.D. in Computer Science & Engineering, University of Michigan

- 2024, MSE in Computer Science & Engineering, University of Michigan

- 2022, BSE in Computer Science & Engineering, University of Michigan

💻 Internships & Research Experience

- 2023.09 - 2023.12, LG AI Research, Ann Arbor. Mentored by Lajanugen Logeswaran and Sungryull Sohn.

- 2021.10 - 2023.08, Lee Lab, University of Michigan. Advised by Professor Honglak Lee, mentored by Yijie Guo and Violet Yao Fu.

- 2022.12 - Now, SLED Lab, University of Michigan.

👔 Service

- Paper Review:

  - Conferences: (AI) NeurIPS, ICLR, ICML, AISTATS; (NLP) ARR, EMNLP, NAACL, COLM; (HCI) HRI, CHI

  - Journal: Journal of Simulation

- Social Activity:

  - Poster Student Co-Chair, Michigan AI Symposium 2025

  - Explore Grad Studies Volunteer, University of Michigan